Google and Others Are Building AI Systems That Doubt Themselves

The most powerful approach in AI, deep learning, is gaining a new capability: a sense of uncertainty.

Researchers at Uber and Google are working on modifications to the two most popular deep-learning frameworks that will enable them to handle probability. This will provide a way for the smartest AI programs to measure their confidence in a prediction or a decision—essentially, to know when they should doubt themselves.

Deep learning, which involves feeding example data to a large and powerful neural network, has been an enormous success over the past few years, enabling machines to recognize objects in images or transcribe speech almost perfectly. But it requires lots of training data and computing power, and it can be surprisingly brittle.

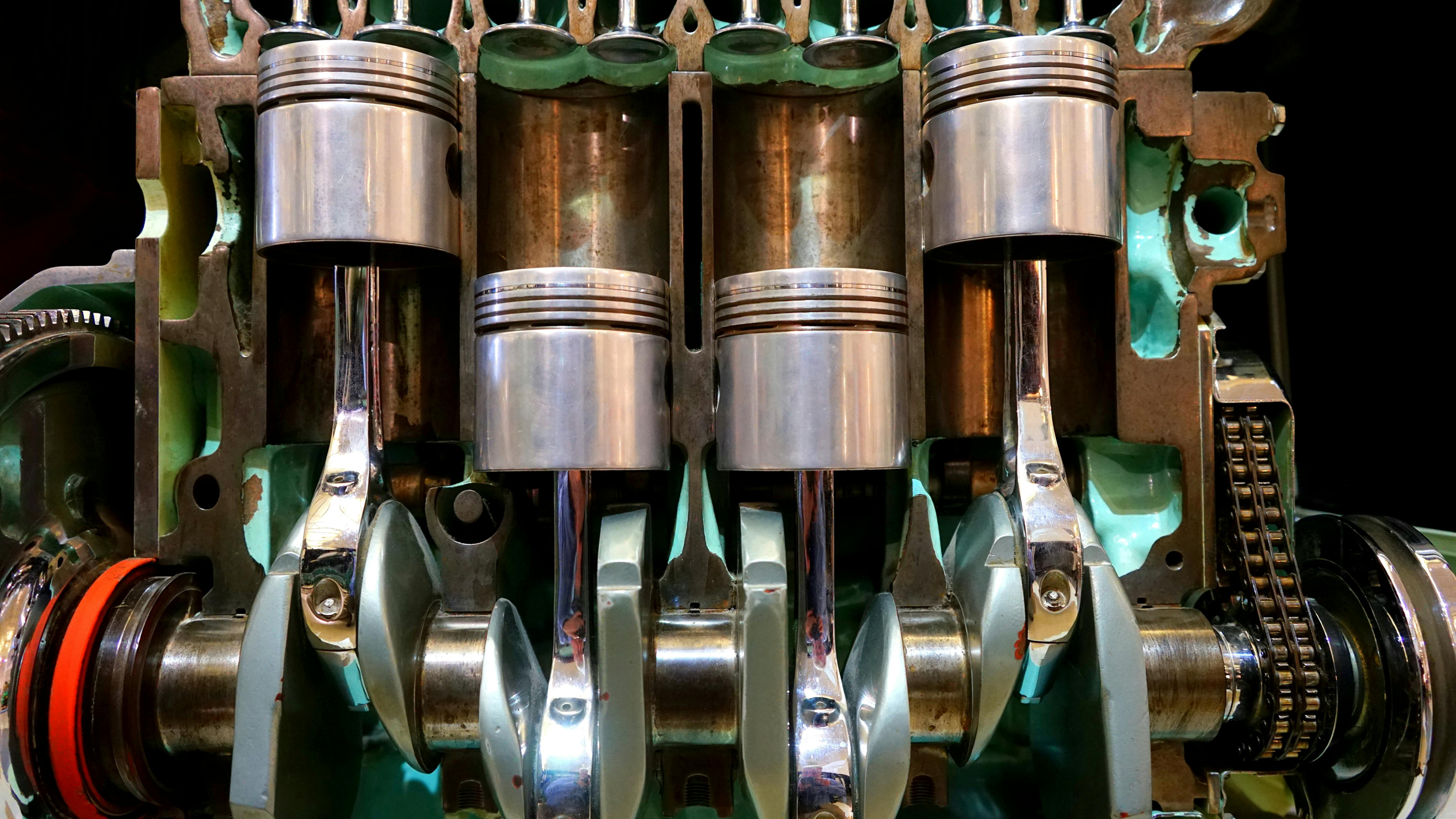

Somewhat counterintuitively, this self-doubt offers one fix. The new approach could be useful in critical scenarios involving self-driving cars and other autonomous machines.

“You would like a system that gives you a measure of how certain it is,” says Dustin Tran, who is working on this problem at Google. “If a self-driving car doesn’t know its level of uncertainty, it can make a fatal error, and that can be catastrophic.”

The work reflects the realization that uncertainty is a key aspect of human reasoning and intelligence. Adding it to AI programs could make them smarter and less prone to blunders, says Zoubin Ghahramani, a prominent AI researcher who is a professor at the University of Cambridge and chief scientist at Uber.

This may prove vitally important as AI systems are used in ever more critical scenarios. “We want to have a rock-solid framework for deep learning, but make it easier for people to represent uncertainty,” Ghahramani told me recently over coffee one morning during a major AI conference in Long Beach, California.

During the same AI conference, a group of researchers gathered at a nearby bar one afternoon to discuss Pyro, a new programming language released by Uber that merges deep learning with probabilistic programming.

The meetup in Long Beach was organized by Noah Goodman, a professor at Stanford who is also affiliated with Uber’s AI Lab. With curly, unkempt hair and an unbuttoned shirt, he could easily be mistaken for a yoga teacher rather than an AI expert. Among those at the gathering was Tran, who has also contributed to the development of Pyro.

Goodman explains that giving deep learning the ability to handle probability can make it smarter in several ways. It could, for instance, help a program recognize things, with a reasonable degree of certainty, from just a few examples rather than many thousands. Offering a measure of certainty rather than a yes-or-no answer should also help with engineering complex systems.
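To make that idea concrete, here is a minimal sketch in plain Python. It does not use Pyro or Edward. It is a textbook Beta-Bernoulli model, and the function name `posterior_summary` and the example counts are illustrative assumptions rather than anything the researchers described. It shows how a probabilistic estimate carries a spread that is wide after a handful of examples and narrows as evidence accumulates.

```python
import math


def posterior_summary(successes, failures):
    """Return (mean, std) of a Beta posterior, assuming a uniform Beta(1, 1) prior."""
    a = 1 + successes  # prior pseudo-count plus observed positives
    b = 1 + failures   # prior pseudo-count plus observed negatives
    mean = a / (a + b)
    variance = (a * b) / ((a + b) ** 2 * (a + b + 1))
    return mean, math.sqrt(variance)


# Hypothetical data: the same kind of evidence at two sample sizes.
few_mean, few_std = posterior_summary(3, 1)
many_mean, many_std = posterior_summary(300, 100)

print(f"4 examples:   estimate {few_mean:.2f}, uncertainty {few_std:.2f}")
print(f"400 examples: estimate {many_mean:.2f}, uncertainty {many_std:.2f}")
```

Both estimates land in a similar range, but the second comes with a spread several times smaller. A downstream system can use that number to decide whether to act or to ask for more data.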

And while a conventional deep-learning system learns only from the data it is fed, Pyro can also be used to build a system preprogrammed with knowledge. This could be useful in just about any scenario where machine learning might currently turn up.

“In cases where you have prior knowledge you want to build into the model, probabilistic programming is especially useful,” Goodman says. “People will use Pyro for all sorts of things.”
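One simple way to picture built-in prior knowledge, sketched here in plain Python rather than in Pyro itself, is to express what is already believed as pseudo-counts that get combined with observed data. The function `posterior_mean`, the specific prior values, and the observation counts below are all hypothetical choices made for illustration.

```python
def posterior_mean(prior_yes, prior_no, seen_yes, seen_no):
    """Mean of a Beta posterior: prior pseudo-counts plus observed counts."""
    total_yes = prior_yes + seen_yes
    total = prior_yes + prior_no + seen_yes + seen_no
    return total_yes / total


# Assumed prior knowledge: "yes" is expected about 80% of the time.
informed_small = posterior_mean(8, 2, seen_yes=1, seen_no=1)
# No prior knowledge: a flat Beta(1, 1) prior.
flat_small = posterior_mean(1, 1, seen_yes=1, seen_no=1)

# With plenty of data, the prior matters much less.
informed_large = posterior_mean(8, 2, seen_yes=500, seen_no=500)
flat_large = posterior_mean(1, 1, seen_yes=500, seen_no=500)

print(informed_small, flat_small)
print(informed_large, flat_large)
```

With only two observations, the informed model leans on what it was told in advance while the flat model does not. After a thousand observations the two nearly agree, because the data outweighs the prior.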

Edward is another programming language that embraces uncertainty, this one developed at Columbia University with funding from DARPA. Both Pyro and Edward are still at early stages of development, but it isn’t hard to see why Uber and Google are interested.

Uber uses machine learning in countless areas, from routing drivers to setting surge pricing, and of course in its self-driving cars. The company has invested heavily in AI, hiring a number of experts working on new ideas. Google has rebuilt its entire business around AI and deep learning of late.

David Blei, a professor of statistics and computer science at Columbia University and Tran’s advisor, says combining deep learning and probabilistic programming is a promising idea that needs more work. “In principle, it’s very powerful,” he says. “But there are many, many technical challenges.”

Still, as Goodman notes, Pyro and Edward are also significant for bringing together two competing schools in AI, one focused on neural networks and the other on probability.

In recent years, the neural-network school has been so dominant that other ideas have been all but left behind. To move forward, the field may need to embrace these other ideas.

“The interesting story here is that you don’t have to think of these camps as separate,” Goodman says. “They can come together—in fact, they are coming together—in the tools that we are now building.”

You might even say AI systems are getting smarter, in part, by learning what they don’t know.
